Introduction

Web scraping is the practice of extracting data from websites with automated tools or scripts so that information can be collected at scale. The Internet holds vast amounts of structured and unstructured data, and that data can be analyzed for purposes ranging from competitive research to market analysis, so the practice has become popular. In the food industry, scraping can be especially valuable for businesses, developers, and analysts who want to gather menu data, prices, or special offers from fast-food companies.

McDonald's, a major player in the fast-food sector, maintains an expansive web presence, with detailed information about its menu on its websites and apps. Extracting this menu data with Python and LXML can yield insights into product assortment, pricing policies, and regional differences, and can reveal menu trends, price revisions, and regional variations over time. This article walks through the steps involved in extracting and processing McDonald's menu data using Python and LXML.

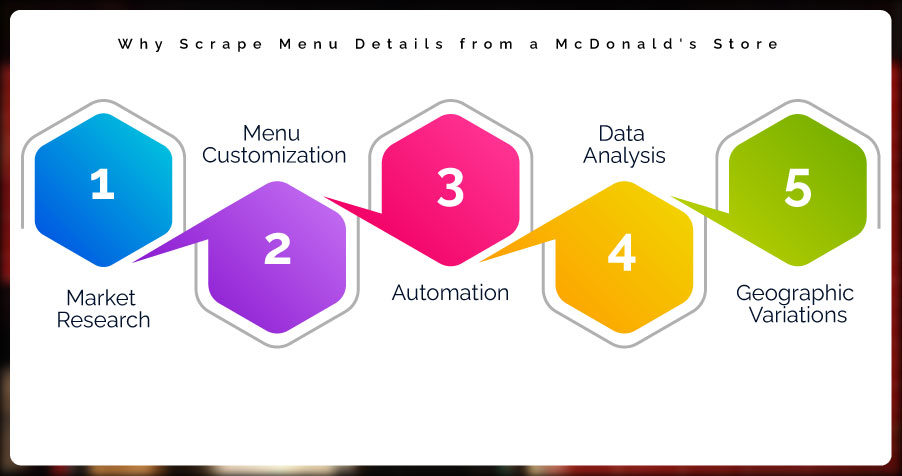

Why Scrape Menu Details from a McDonald's Store?

There are several reasons why scraping menu data from a McDonald's store can be helpful:

1. Market Research: Analysts or competitors may want to study McDonald's menu to understand its pricing strategies, seasonal items, and promotional offers. Scraping the menu with Python and LXML collects this information in detail, including new menu introductions and limited-time offers.

2. Menu Customization: Developers or restaurant owners may want to build menu scraping tools into their apps or services. For instance, a mobile app that helps users find nearby restaurants might scrape McDonald's menu with Python and LXML to show the latest offerings.

3. Automation: Tracking changes to McDonald's menu can be automated with scraping. If McDonald's updates its menu frequently, a scraper built with Python and LXML can record those changes without manual intervention.

4. Data Analysis: Researchers and data scientists may want to analyze McDonald's menu data for patterns, such as which items are featured most often, which are seasonal, or how prices change over time. Scraping McDonald's restaurant data makes it practical to assemble and analyze a dataset of this size.

5. Geographic Variations: McDonald's offers regional menus in different parts of the world. Extracting McDonald's food delivery data gives broad access to these varied menu items, which helps businesses analyze regional differences and understand global consumer trends.

With these motivations in mind, we now turn to the practical side: scraping restaurant menu data from a McDonald's store using Python and LXML.

Role of McDonald's Menu Data Scraping Using Python and LXML in Supporting Market Research

McDonald's menu data scraping with Python and LXML supports market research by capturing the details of the brand's offerings, pricing strategy, and promotions. Businesses and analysts use McDonald's websites to track menu changes, seasonal promotions, and regional pricing trends. This kind of information, including data obtained from Restaurant Data Intelligence Services, helps determine the brand's competitive positioning, understand consumer preferences, and identify food trends early.

Scraped menu data also feeds tools such as a Food Price Dashboard, which aggregates pricing data to show how prices have changed over time. With access to such data, researchers can analyze pricing strategies and estimate future market movements. The same data benefits Food Delivery Intelligence Services, enabling them to offer actionable insights for optimizing menu offerings and improving customer experience. Ultimately, McDonald's menu data scraping provides a foundation for data-driven decision-making in the fast-food industry.

Prerequisites

Before beginning the scraping process, you must install the necessary tools and libraries. The key tools we'll be using are Python, LXML, and Requests.

Installing Python and Required Libraries

1. Python: Python is a widely used programming language for web scraping. If you do not already have Python installed, download it from the official website, python.org.

2. LXML: LXML is a powerful library for processing XML and HTML documents. It is particularly useful for web scraping because it efficiently parses large HTML pages.

To install LXML, use the following command:

pip install lxml

3. Requests: The Requests library allows you to send HTTP requests to fetch content from websites. Install it using:

pip install requests

Once you've installed these libraries, you can begin scraping data.

Steps to Scrape Menu Details from a McDonald's Store Using Python and LXML

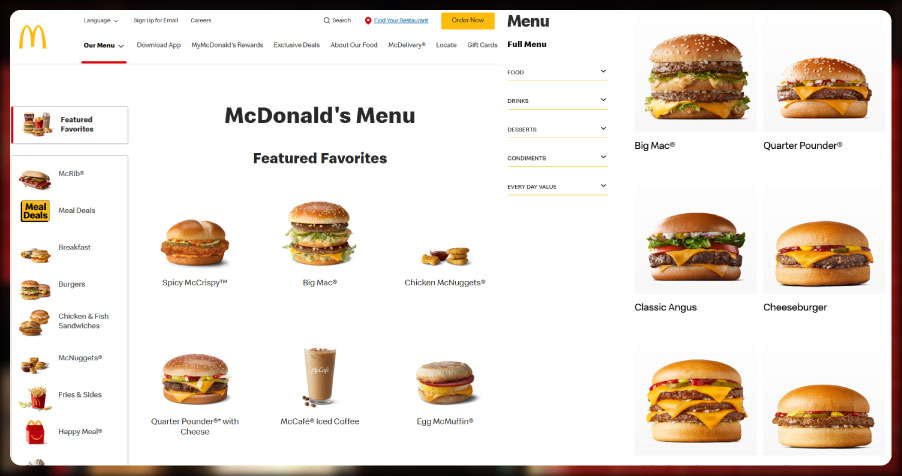

Step 1: Identify the Target URL

The first step in web scraping is to identify the McDonald's menu page URL you wish to scrape. McDonald's websites vary by country and region, so you must select the correct URL.

For instance, McDonald's USA has a specific menu page at:

- https://www.mcdonalds.com/us/en-us/full-menu.html

For McDonald's Australia, you might use:

- https://mcdonalds.com.au/menu

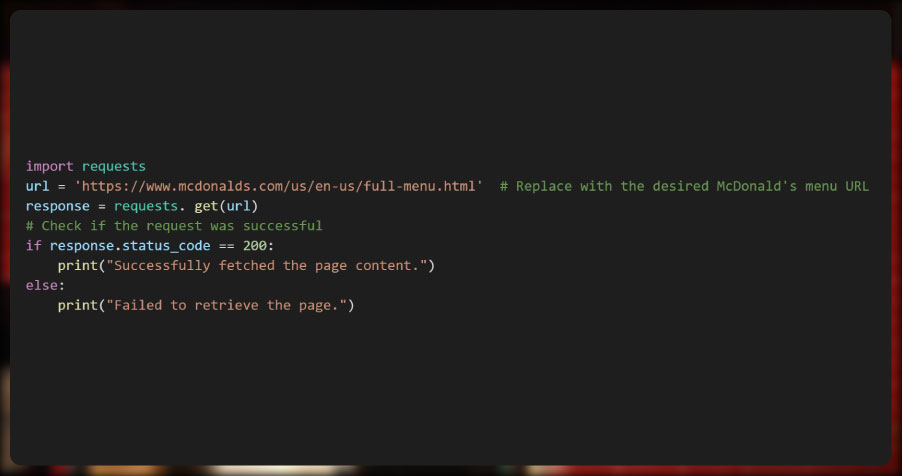
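Because the correct URL depends on the region, it can help to keep the menu URLs in one place. The sketch below is a minimal example, assuming you key pages by a short region code; the `MENU_URLS` table and the `get_menu_url` helper are hypothetical names, and only the two URLs listed above come from this article.

```python
# Hypothetical lookup table of regional menu pages. Only these two URLs
# come from the article; verify any others before adding them.
MENU_URLS = {
    "us": "https://www.mcdonalds.com/us/en-us/full-menu.html",
    "au": "https://mcdonalds.com.au/menu",
}


def get_menu_url(region: str) -> str:
    """Return the menu URL for a region code such as "us" or "au"."""
    key = region.strip().lower()  # accept "US", " au ", etc.
    if key not in MENU_URLS:
        raise ValueError(f"No menu URL configured for region {region!r}")
    return MENU_URLS[key]


print(get_menu_url("US"))  # https://www.mcdonalds.com/us/en-us/full-menu.html
```

Raising an error for unknown regions makes a typo fail loudly instead of silently scraping the wrong site.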

Step 2: Send a Request to Fetch the Web Page

Once you've identified the URL, the next step is to send a request to fetch the page's HTML content. You can do this using the requests library.

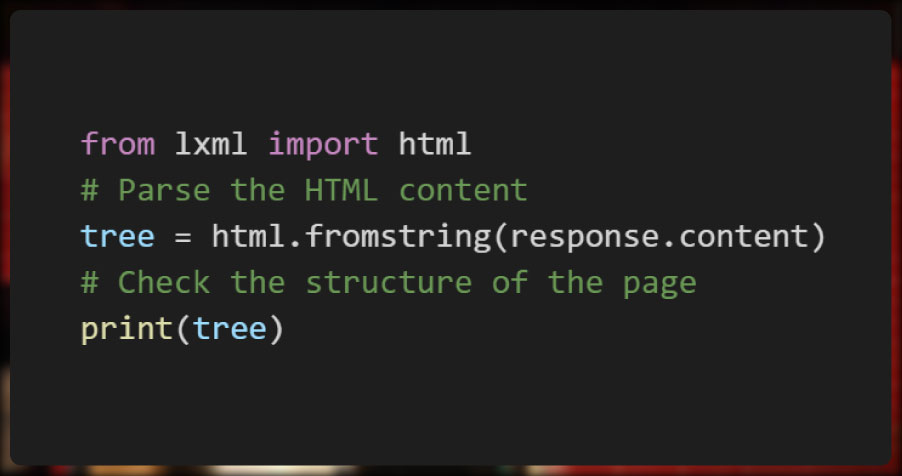
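A minimal sketch of this request step, assuming the Requests library installed earlier. The `fetch_page` helper, the `DEFAULT_HEADERS` value, and the 10-second timeout are illustrative choices, not requirements of the McDonald's site. The function accepts an optional session so that connections can be reused across several pages.

```python
import requests

# Hypothetical User-Agent; identify your scraper honestly and include contact details.
DEFAULT_HEADERS = {"User-Agent": "menu-research-script/0.1 (contact: you@example.com)"}


def fetch_page(url, session=None, timeout=10.0):
    """Download a page and return its raw HTML as bytes.

    raise_for_status() turns 4xx/5xx responses into requests.HTTPError,
    so an error page is never mistaken for a menu page.
    """
    if session is None:
        session = requests.Session()
    response = session.get(url, headers=DEFAULT_HEADERS, timeout=timeout)
    response.raise_for_status()
    return response.content
```

For example, `html_bytes = fetch_page("https://www.mcdonalds.com/us/en-us/full-menu.html")` returns the raw HTML. Returning `response.content` (bytes) rather than `response.text` lets LXML use the encoding declared in the page. If a page builds its menu with JavaScript, the downloaded HTML may not contain the items at all, so check the raw source before writing XPath expressions.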

Step 3: Parse the HTML Content Using LXML

After retrieving the page's HTML content, parse it with LXML so that you can navigate the document and extract specific elements. LXML is a popular choice for web scraping because it is fast and easy to use, which suits tasks like extracting detailed information from a McDonald's menu. For large volumes of menu data, Food Delivery Scraping API Services can further streamline this process.
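A minimal parsing sketch, assuming the HTML is already available as bytes (for example, `response.content` from Requests). The `SAMPLE_HTML` markup is a hypothetical stand-in so the example runs offline; `lxml.html.fromstring` returns the root element, which you can then query with `findtext` or `xpath`.

```python
from lxml import html

# Hypothetical stand-in for the bytes returned by requests (response.content).
SAMPLE_HTML = b"""<html>
  <head><title>Full Menu</title></head>
  <body><h1>Our Menu</h1></body>
</html>"""

# fromstring() parses the document and returns its root <html> element.
tree = html.fromstring(SAMPLE_HTML)

print(tree.tag)                    # html
print(tree.findtext(".//title"))   # Full Menu
print(tree.xpath("string(//h1)"))  # Our Menu
```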

Step 4: Inspect the Page Structure

Inspecting the HTML structure of the McDonald's menu page is crucial to identifying where the relevant menu details are located. You can do this using browser developer tools (right-click the webpage and select "Inspect") or by analyzing the page source.

For example, McDonald's menu items might be listed in specific div tags whose class names identify menu items or menu descriptions. The XPath expressions or CSS selectors you use to extract the data depend on the page's structure.

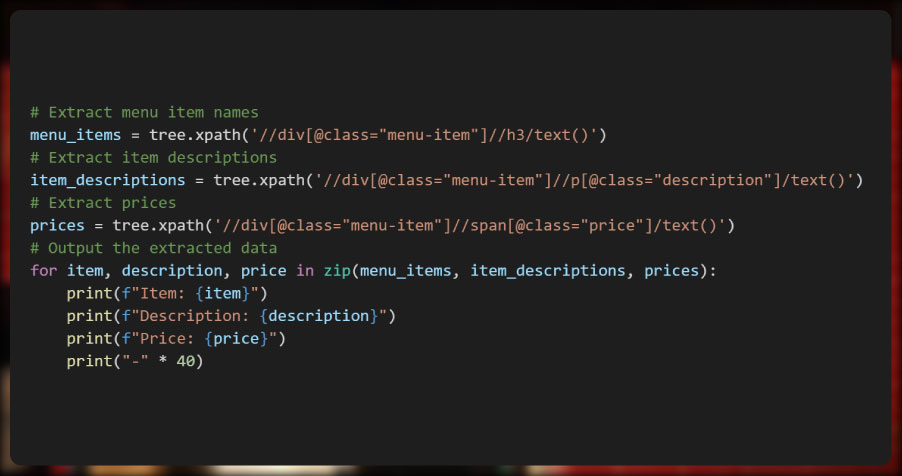
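On a large page, repeated class names are often the quickest clue to where menu items live. The helper below is a hypothetical aid for this inspection step, not part of LXML: it counts every class token in a parsed page so that container classes repeated once per item stand out. The sample markup and its class names are assumptions.

```python
from collections import Counter

from lxml import html


def class_frequencies(root, top=10):
    """Return the `top` most common class tokens in a parsed page.

    Hypothetical inspection aid: a class that repeats once per menu item
    is a good candidate for the item container.
    """
    counts = Counter()
    for element in root.xpath("//*[@class]"):
        counts.update(element.get("class").split())
    return counts.most_common(top)


# Hypothetical markup standing in for a downloaded menu page.
page = html.fromstring("""<html><body>
  <div class="menu-item"><span class="item-name">Sample Burger</span></div>
  <div class="menu-item"><span class="item-name">Sample Fries</span></div>
  <div class="footer">About us</div>
</body></html>""")

print(class_frequencies(page, top=3))
```

Once a candidate class stands out, confirm it in the browser's developer tools before building XPath expressions around it.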

Step 5: Extract Menu Details Using XPath

Now that you have parsed the page, you can use XPath to extract the relevant menu items. XPath is a query language for navigating XML documents, and LXML provides robust XPath support for both XML and parsed HTML.

For instance, to scrape all menu items, you might use the following XPath:

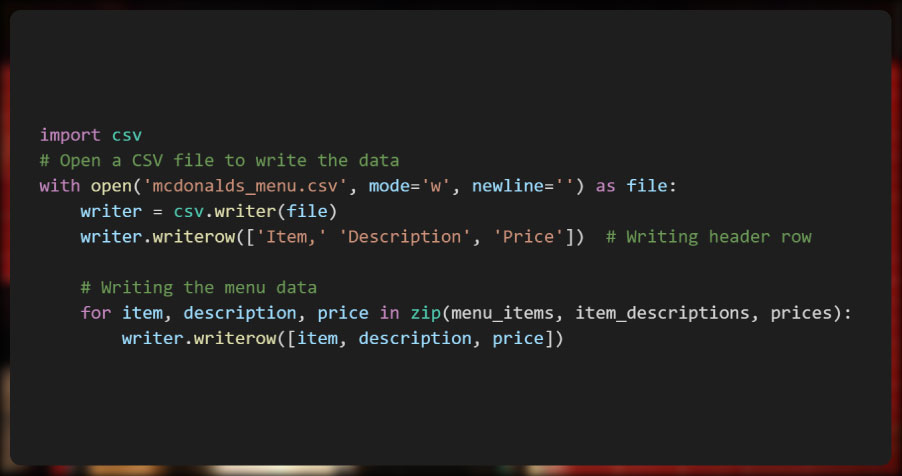
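As a sketch of this step, the code below pulls a name and a price out of each item container. The class names `menu-item`, `item-name`, and `item-price` are hypothetical; substitute the ones you found while inspecting the real page. The `has_class` helper uses a common XPath 1.0 idiom that matches a whole class token, so `menu-item` does not also match an element whose class is `menu-item-list`.

```python
from lxml import html


def has_class(name):
    """XPath 1.0 test that matches a whole class token, not a substring."""
    return f'contains(concat(" ", normalize-space(@class), " "), " {name} ")'


# Hypothetical class names: replace them with the ones found in Step 4.
ITEM_XPATH = f"//div[{has_class('menu-item')}]"
NAME_XPATH = f"normalize-space(.//*[{has_class('item-name')}])"
PRICE_XPATH = f"normalize-space(.//*[{has_class('item-price')}])"


def extract_items(root):
    """Return one {"name", "price"} dict per menu item container."""
    items = []
    for node in root.xpath(ITEM_XPATH):
        items.append({
            # str() converts lxml's string results into plain Python strings.
            "name": str(node.xpath(NAME_XPATH)),
            "price": str(node.xpath(PRICE_XPATH)),
        })
    return items


# Hypothetical sample markup; the prices are placeholders, not real data.
page = html.fromstring("""<html><body>
  <div class="menu-item"><h3 class="item-name">Sample Burger</h3>
    <span class="item-price">$5.99</span></div>
  <div class="menu-item featured"><h3 class="item-name">  Sample   Fries </h3>
    <span class="item-price">$2.49</span></div>
  <div class="menu-item-list">Not an item container</div>
</body></html>""")

for item in extract_items(page):
    print(item)
```

`normalize-space` also collapses stray whitespace, so the second item's name comes out as `Sample Fries`.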

Step 6: Store the Data

Once you have extracted the menu data, you can store it in a structured format, such as a CSV file, for further analysis. Python's built-in csv module can help with this.

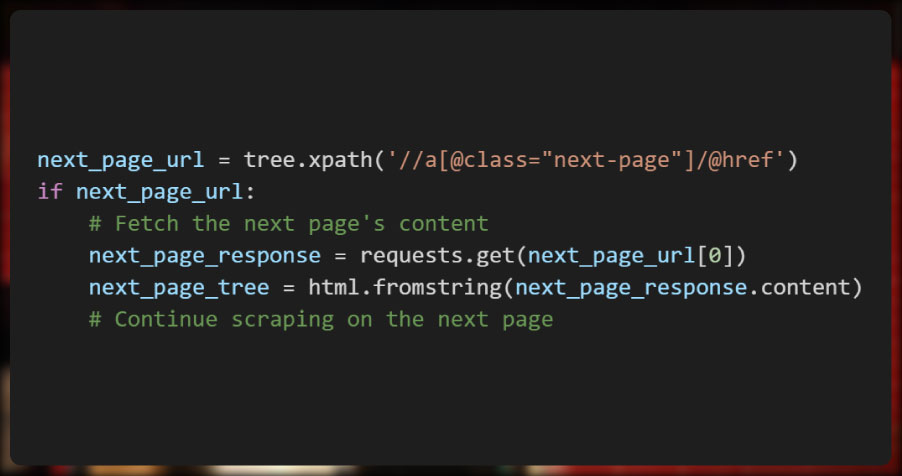
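A minimal sketch using `csv.DictWriter`, assuming each menu item is a dictionary with `name` and `price` keys (a hypothetical schema; add columns such as category if you extract them). Opening the file with `newline=""` follows the csv module's documented recommendation and prevents blank lines between rows on Windows.

```python
import csv
import os
import tempfile


def save_to_csv(rows, path, fieldnames=("name", "price")):
    """Write a list of dicts to a UTF-8 CSV file with a header row."""
    # newline="" is what the csv module docs recommend for files it writes.
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(fieldnames))
        writer.writeheader()
        writer.writerows(rows)


# Hypothetical rows in the shape produced by the extraction step.
sample_rows = [
    {"name": "Sample Burger", "price": "$5.99"},
    {"name": "Sample Fries", "price": "$2.49"},
]
out_path = os.path.join(tempfile.mkdtemp(), "menu_items.csv")
save_to_csv(sample_rows, out_path)
print(f"Wrote {len(sample_rows)} rows to {out_path}")
```

By default, `DictWriter` raises `ValueError` if a row contains a key that is not listed in `fieldnames`, which helps catch extraction mistakes early.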

Step 7: Handle Pagination

A menu may be spread across multiple pages, especially if the website has a large selection of items. To handle pagination, follow the "Next Page" links and repeat the scraping process on each subsequent page.

You can find the link for the next page by inspecting the page's HTML structure. Typically, next-page links are located within tags with a class such as next-page or pagination.

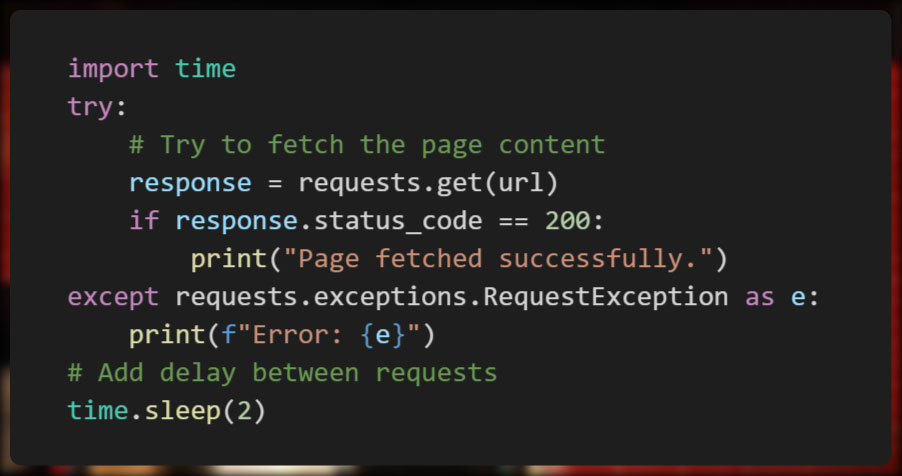
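The loop below sketches this pagination logic under a few assumptions: `next_xpath` is a hypothetical expression that returns the next link's `href` value, `fetch` is any function that takes a URL and returns HTML bytes, and `max_pages` caps the crawl. `urljoin` resolves relative links such as `?page=2`, and the `seen` set stops the loop if two pages link to each other. A dictionary of fake pages stands in for the network so the example runs offline.

```python
from urllib.parse import urljoin

from lxml import html


def iter_pages(start_url, fetch, next_xpath, max_pages=20):
    """Yield (url, root) for each page, following next-page links.

    fetch: any callable that takes a URL and returns HTML bytes.
    next_xpath: an XPath expression returning the next link's href value(s).
    """
    url = start_url
    seen = set()
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        root = html.fromstring(fetch(url))
        yield url, root
        links = root.xpath(next_xpath)
        # Resolve relative hrefs (e.g. "?page=2") against the current URL.
        url = urljoin(url, links[0]) if links else None


# Hypothetical offline pages standing in for real HTTP responses.
FAKE_PAGES = {
    "https://example.com/menu?page=1":
        b'<html><body><p>Page one</p><a class="next-page" href="?page=2">Next</a></body></html>',
    "https://example.com/menu?page=2":
        b"<html><body><p>Page two</p></body></html>",
}

NEXT_XPATH = '//a[contains(@class, "next-page")]/@href'  # hypothetical selector

for page_url, page_root in iter_pages(
    "https://example.com/menu?page=1", FAKE_PAGES.__getitem__, NEXT_XPATH
):
    print(page_url, page_root.xpath("string(//p)"))
```

In a real run, `fetch` would wrap an HTTP request, ideally with the delays described in the next step.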

Step 8: Error Handling and Delays

Scraping requests can fail or be blocked by websites. To reduce errors and avoid being blocked, it's good practice to:

- Add delays between requests to mimic human browsing behavior (e.g., using time.sleep()).

- Implement error handling to catch and manage any issues during scraping.
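Both practices can be combined in a single request helper. This is a minimal sketch, assuming a `requests.Session` (or any object with a compatible `get` method); the `polite_get` name, retry count, and delay values are illustrative. It pauses before every attempt, doubles the pause after each failure (exponential backoff with random jitter), and re-raises the last error if every attempt fails.

```python
import random
import time

import requests


def polite_get(session, url, retries=3, base_delay=2.0, timeout=10.0):
    """GET `url`, pausing before each attempt and backing off after failures.

    The wait before attempt n (counting from 0) is base_delay * 2**n plus up to
    base_delay seconds of random jitter, so requests are spread out over time.
    """
    if retries < 1:
        raise ValueError("retries must be at least 1")
    last_error = None
    for attempt in range(retries):
        time.sleep(base_delay * (2 ** attempt) + random.uniform(0, base_delay))
        try:
            response = session.get(url, timeout=timeout)
            response.raise_for_status()  # treat 4xx/5xx as failures
            return response
        except requests.RequestException as exc:
            last_error = exc
            print(f"Attempt {attempt + 1}/{retries} for {url} failed: {exc}")
    raise last_error
```

For example, `response = polite_get(requests.Session(), "https://www.mcdonalds.com/us/en-us/full-menu.html")`. In practice, you may want to retry only on timeouts, connection errors, and 429 or 5xx responses, since a 404 will not succeed on a second try.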

Conclusion

Scraping McDonald's menu details can be valuable for gathering data about its offerings, promotions, and regional differences. With Python and LXML, you can automate the extraction of menu details and store the data for further analysis or integration into other applications. The result can be a comprehensive McDonald's Food Dataset to analyze for trends, pricing, or seasonal variations.

While Food Delivery Data Scraping Services offer a powerful way to collect data, it is essential to respect the website's terms of service and avoid overloading its servers with excessive requests. Always consider the ethical and legal aspects of scraping, and make sure you are not violating any terms of use. Businesses and developers can also use McDonald's Food Delivery Scraping API Services to automate data extraction more effectively and keep their applications running smoothly without overburdening the server.

If you are looking for reliable data scraping services, Food Data Scrape is at your service. We are prominent in Food Data Aggregator and Mobile Restaurant App Scraping services, providing impeccable data analysis for strategic decision-making.