The Client

Our client, a major player in the grocery industry, approached us to use our grocery app scraping API. Their objective was to collect information from all the leading grocery stores in India. By using our API, they aimed to obtain up-to-date details on stock levels, prices, and customer preferences for products. This approach would help them improve their market knowledge, inventory control, and competitiveness in the volatile grocery industry.

Key Challenges

Data Volume: One of the main challenges in scraping multiple grocery store apps was the sheer amount of data collected. Handling this volume required efficient storage, processing, and analysis capacity.
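
A common way to keep memory and storage work bounded at this scale is to process scraped records in fixed-size batches rather than all at once. The sketch below assumes records arrive as an iterable; the helper name `batched` and the batch size of 500 are illustrative, not taken from the project.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(records: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield successive lists of at most `size` records from any iterable."""
    if size < 1:
        raise ValueError("size must be at least 1")
    it = iter(records)
    while True:
        chunk = list(islice(it, size))  # pull the next `size` items lazily
        if not chunk:
            return
        yield chunk


# Usage: a simulated stream of 1,200 scraped rows, handled 500 at a time.
rows = ({"sku": f"SKU{i}", "price": i * 1.5} for i in range(1200))
sizes = [len(chunk) for chunk in batched(rows, 500)]
print(sizes)  # [500, 500, 200]
```

Because `rows` is a generator, only one batch is held in memory at a time; each batch can then be written to storage in a single operation.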

Data Consistency: Another problem was maintaining data integrity and quality across the different grocery store apps. Each app could use its own formats, structures, or update frequencies, which made the data difficult to harmonize.
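
One way to harmonize differing app formats is a per-app field mapping onto a shared schema. In this minimal sketch the app names (`app_a`, `app_b`) and their field names are hypothetical; real apps will expose different fields.

```python
from typing import Any, Dict

# Hypothetical field mappings: each app names the same attribute differently.
FIELD_MAPS: Dict[str, Dict[str, str]] = {
    "app_a": {"productName": "name", "mrp": "price", "inStock": "in_stock"},
    "app_b": {"title": "name", "selling_price": "price", "availability": "in_stock"},
}


def normalize(app: str, raw: Dict[str, Any]) -> Dict[str, Any]:
    """Rename an app-specific record's keys to one shared schema."""
    record = {target: raw.get(source) for source, target in FIELD_MAPS[app].items()}
    record["source"] = app
    # Some apps report availability as text; map it to a boolean.
    if isinstance(record["in_stock"], str):
        record["in_stock"] = record["in_stock"].strip().lower() == "available"
    return record


a = normalize("app_a", {"productName": "Basmati Rice 1kg", "mrp": 120.0, "inStock": True})
b = normalize("app_b", {"title": "Basmati Rice 1kg", "selling_price": 118.0, "availability": "Available"})
print(a)
print(b)
```

Adding a new app then means adding one mapping entry rather than changing downstream code.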

Compliance and Legal Issues: Data privacy regulations and the terms of service of the different grocery apps made compliance a challenge. We had to ensure that the scraping activities were lawful and did not violate any of those terms or regulations.
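
Part of automating this check can be reading a site's robots.txt before fetching a page. The sketch uses Python's standard `urllib.robotparser`; the `allowed` helper, the rules, the user agent, and the URLs are made-up examples, and a robots.txt check does not replace a review of each store's terms of service or applicable privacy law.

```python
from urllib.robotparser import RobotFileParser


def allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules let `user_agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from text, no network call
    return parser.can_fetch(user_agent, url)


# Hypothetical rules for an example store.
rules = """
User-agent: *
Disallow: /account/
Allow: /products/
"""

print(allowed(rules, "example-bot", "https://shop.example.com/products/rice"))   # True
print(allowed(rules, "example-bot", "https://shop.example.com/account/orders"))  # False
```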

Key Solutions

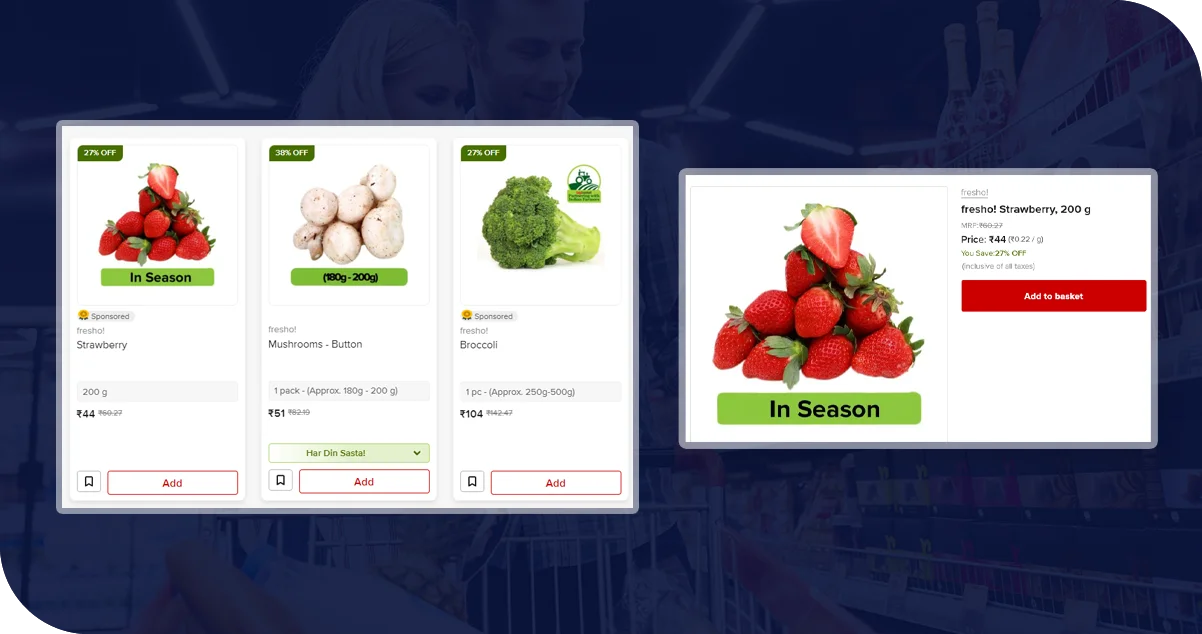

Efficient Data Collection: Our grocery data scraper can collect and organize large amounts of data from different grocery store apps without omitting any information.

Consistency and Accuracy: Data validation and verification procedures are strictly applied to all data sources to achieve data consistency and accuracy.
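
A validation step of this kind can be as simple as a function that lists the problems in each record before it is accepted. The required fields and rules below are assumptions for illustration, written for a hypothetical record schema with `name`, `price`, `in_stock`, and `source` fields.

```python
from typing import Any, Dict, List

REQUIRED_FIELDS = ("name", "price", "in_stock", "source")


def validate(record: Dict[str, Any]) -> List[str]:
    """Return a list of problems found in a record (empty if it looks valid)."""
    problems = [f"missing {field}" for field in REQUIRED_FIELDS
                if record.get(field) in (None, "")]
    price = record.get("price")
    if price is not None:
        # bool is a subclass of int, so exclude it explicitly.
        if not isinstance(price, (int, float)) or isinstance(price, bool):
            problems.append("price is not numeric")
        elif price < 0:
            problems.append("price is negative")
    in_stock = record.get("in_stock")
    if in_stock is not None and not isinstance(in_stock, bool):
        problems.append("in_stock is not a boolean")
    return problems


good = {"name": "Toor Dal 1kg", "price": 145.0, "in_stock": True, "source": "app_a"}
bad = {"name": "", "price": -5, "in_stock": "yes", "source": "app_b"}
print(validate(good))  # []
print(validate(bad))   # ['missing name', 'price is negative', 'in_stock is not a boolean']
```

Returning a list of problems, rather than raising on the first one, makes it easy to log every issue with a rejected record.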

Compliance and Privacy: Our scraper respects data privacy laws and the stores' terms of service to ensure that data is collected legally and ethically.

Methodologies Used

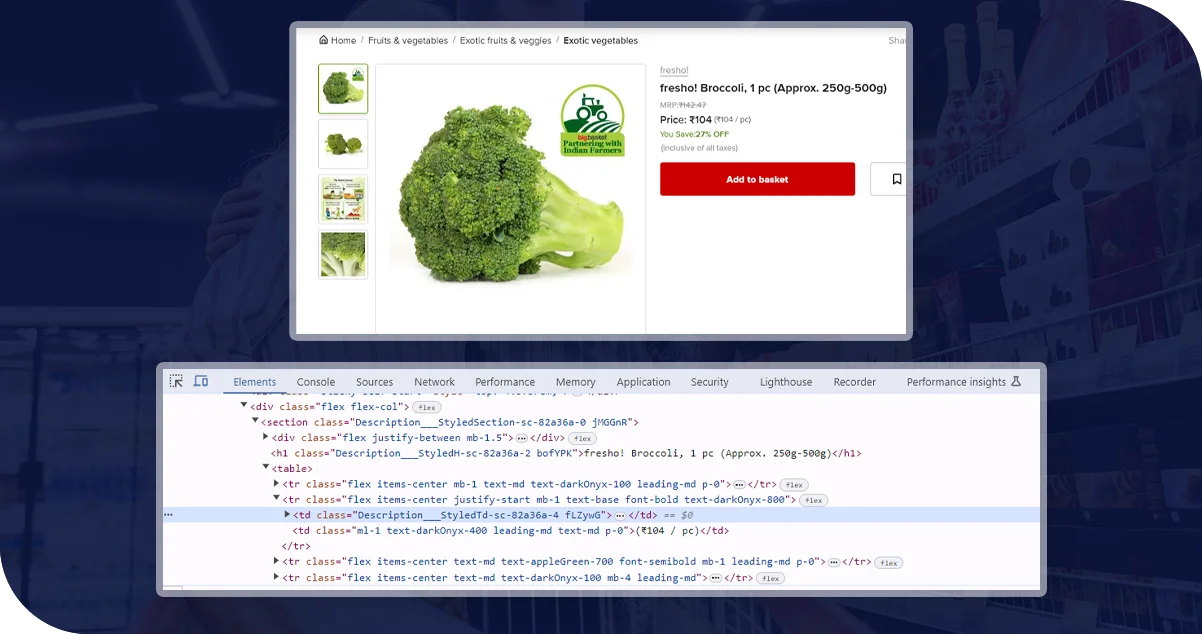

Web Scraping Techniques: For data collection, we used Python and BeautifulSoup to improve the speed and accuracy of scraping the grocery store apps.
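
The project used BeautifulSoup; to keep this sketch dependency-free, it swaps in Python's standard-library `html.parser` to show the same idea of pulling product names and prices out of HTML. The `product`, `name`, and `price` class names are hypothetical markup. With BeautifulSoup, the equivalent lookup would typically start from `soup.select("div.product")`.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional


class ProductParser(HTMLParser):
    """Collect name/price text from hypothetical product-card markup."""

    def __init__(self) -> None:
        super().__init__()
        self.products: List[Dict[str, str]] = []
        self._field: Optional[str] = None  # which field's span we are inside, if any

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "div" and "product" in classes:
            self.products.append({})  # start a new product card
        elif tag == "span" and self.products:
            for field in ("name", "price"):
                if field in classes:
                    self._field = field

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None

    def handle_data(self, data):
        if self._field and self.products:
            current = self.products[-1]
            current[self._field] = current.get(self._field, "") + data.strip()


html = """
<div class="product"><span class="name">Basmati Rice 1kg</span><span class="price">₹120</span></div>
<div class="product"><span class="name">Toor Dal 1kg</span><span class="price">₹145</span></div>
"""
parser = ProductParser()
parser.feed(html)
parser.close()
print(parser.products)
```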

Data Parsing and Cleaning: The extracted data was checked for consistency and then cleaned to remove inconsistencies and errors.
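
Cleaning typically includes normalizing whitespace, turning price strings into numbers, and dropping duplicates. This sketch assumes prices arrive as text such as `₹1,299.00` or `Rs. 149` and treats records with the same source and name as duplicates; both rules are illustrative choices, and the function names are hypothetical.

```python
import re
from typing import Any, Dict, Iterable, List, Optional


def parse_price(text: str) -> Optional[float]:
    """Turn strings like '₹1,299.00' or 'Rs. 149' into a float; None if no number."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    return float(match.group().replace(",", "")) if match else None


def clean(records: Iterable[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Collapse whitespace in names, parse text prices, and keep the first of each (source, name)."""
    seen = set()
    cleaned = []
    for rec in records:
        name = " ".join(str(rec.get("name", "")).split())
        key = (rec.get("source"), name.lower())
        if not name or key in seen:
            continue  # skip empty names and duplicates
        seen.add(key)
        price = rec.get("price")
        cleaned.append({**rec, "name": name,
                        "price": parse_price(price) if isinstance(price, str) else price})
    return cleaned


rows = [
    {"source": "app_a", "name": "  Toor Dal  1kg ", "price": "₹145.00"},
    {"source": "app_a", "name": "Toor Dal 1kg", "price": "₹145.00"},
    {"source": "app_b", "name": "Toor Dal 1kg", "price": "Rs. 149"},
]
cleaned = clean(rows)
print(cleaned)
```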

API Integration: Where a store offered an API, we used it to pull data in real time so that the information was always current.
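
When a store offers an API, collection usually means following its pagination until no pages remain. This sketch assumes a hypothetical JSON response with `items` and `next_cursor` fields; `fetch_page` is injected so it can be backed by a real HTTP client in production or by canned responses, as in the usage below.

```python
import json
from typing import Any, Callable, Dict, Iterator, Optional


def iter_api_products(fetch_page: Callable[[Optional[str]], str]) -> Iterator[Dict[str, Any]]:
    """Follow cursor-based pages until the (hypothetical) API returns no next cursor."""
    cursor: Optional[str] = None
    while True:
        page = json.loads(fetch_page(cursor))
        yield from page.get("items", [])
        cursor = page.get("next_cursor")
        if not cursor:
            break


# Canned responses standing in for real HTTP calls.
PAGES = {
    None: '{"items": [{"sku": "A1"}, {"sku": "A2"}], "next_cursor": "p2"}',
    "p2": '{"items": [{"sku": "A3"}], "next_cursor": null}',
}


def fake_fetch(cursor: Optional[str]) -> str:
    return PAGES[cursor]


items = list(iter_api_products(fake_fetch))
print(items)
```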

Automation: We scheduled the scrapers to run at regular intervals so that the collected data stayed up to date and relevant.
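
A cron job or a dedicated job scheduler would usually trigger recurring runs; the in-process loop below only illustrates fixed-rate scheduling. The source does not state the run frequency, so the hourly interval is an assumption, and the clock and sleep functions are injectable so the example runs instantly with a fake clock.

```python
import time
from typing import Callable, List


def run_every(job: Callable[[], None], interval_s: float, runs: int,
              sleep: Callable[[float], None] = time.sleep,
              clock: Callable[[], float] = time.monotonic) -> None:
    """Run `job` `runs` times, starting each run `interval_s` seconds after the previous start."""
    next_start = clock()
    for i in range(runs):
        job()
        if i == runs - 1:
            break
        next_start += interval_s
        delay = next_start - clock()
        if delay > 0:
            sleep(delay)


class FakeClock:
    """Simulated time so the demo does not actually wait."""

    def __init__(self) -> None:
        self.t = 0.0

    def now(self) -> float:
        return self.t

    def sleep(self, seconds: float) -> None:
        self.t += seconds


fc = FakeClock()
starts: List[float] = []
run_every(lambda: starts.append(fc.now()), 3600, 3, sleep=fc.sleep, clock=fc.now)
print(starts)  # [0.0, 3600.0, 7200.0]
```

Scheduling from the previous start time, rather than sleeping a fixed amount after each run, keeps the interval from drifting when a run takes a while.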

Data Storage and Analysis: The cleaned data was stored in a structured format, making it easy to extract the information relevant to the client.
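
Structured storage can be as simple as a relational table keyed by source, product, and scrape time, which keeps price history queryable. This sketch uses Python's built-in `sqlite3` with an in-memory database; the table layout, the `store` helper, the timestamp, and the sample rows are illustrative.

```python
import sqlite3
from typing import Any, Dict, Iterable

SCHEMA = """
CREATE TABLE IF NOT EXISTS products (
    source     TEXT NOT NULL,
    name       TEXT NOT NULL,
    price      REAL,
    in_stock   INTEGER,
    scraped_at TEXT NOT NULL,
    PRIMARY KEY (source, name, scraped_at)
)
"""


def store(conn: sqlite3.Connection, records: Iterable[Dict[str, Any]], scraped_at: str) -> None:
    """Insert cleaned records as one snapshot; re-inserting the same snapshot replaces rows."""
    conn.execute(SCHEMA)
    conn.executemany(
        "INSERT OR REPLACE INTO products VALUES (:source, :name, :price, :in_stock, :scraped_at)",
        [{**r, "in_stock": int(r["in_stock"]), "scraped_at": scraped_at} for r in records],
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
store(conn, [
    {"source": "app_a", "name": "Toor Dal 1kg", "price": 145.0, "in_stock": True},
    {"source": "app_b", "name": "Toor Dal 1kg", "price": 149.0, "in_stock": False},
], "2024-01-01T09:00")

# Cheapest in-stock offer, and the price spread across stores.
cheapest = conn.execute(
    "SELECT source, price FROM products WHERE name = ? AND in_stock = 1 ORDER BY price",
    ("Toor Dal 1kg",),
).fetchall()
spread = conn.execute("SELECT name, MIN(price), MAX(price) FROM products GROUP BY name").fetchall()
print(cheapest, spread)
```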

Advantages of Collecting Data Using Food Data Scrape

Experience and Expertise: Our experience in web scraping makes our solutions accurate and effective.

Customized Solutions: We tailor every scraping project to your specific requirements.

Scalability: Our services are suitable for all types of projects, whether you have a few sources or hundreds.

Data Quality: The goal is to provide clean, accurate, and structured data that can be used for further analysis.

Compliance: All our scraping efforts always comply with the legal and ethical requirements.

Final Outcome

We extracted grocery data from the leading grocery stores in India, gathering product information such as price, description, and stock availability. After extraction, the data was organized into a structured format that is ready for analysis and can be loaded into the client's systems. These practices help ensure that the data is reliable, relevant to the client's needs, and able to support their business processes.
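
Loading the results into another system often starts from a flat export. This sketch writes records to CSV with Python's `csv` module; the column names mirror the fields mentioned above (price, description, stock availability) plus a source column, and they are assumptions rather than the client's actual format.

```python
import csv
import io
from typing import Any, Dict, Iterable

FIELDS = ["source", "name", "description", "price", "in_stock"]


def to_csv(records: Iterable[Dict[str, Any]]) -> str:
    """Serialize records to CSV text with a fixed column order; extra keys are ignored."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, extrasaction="ignore", lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)  # values containing commas are quoted automatically
    return buffer.getvalue()


csv_text = to_csv([{
    "source": "app_a",
    "name": "Toor Dal 1kg",
    "description": "Unpolished, 1 kg pack",
    "price": 145.0,
    "in_stock": True,
}])
print(csv_text)
```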